A nine-pound Cavapoo, to be precise. Momo (the coder) made games, not important security patches or anything. Before the vibe coding could start, the owner had to solve some delightful engineering problems, like finding a keyboard that could withstand paws and building an automated treat dispenser to reward Momo for smashing keys. Getting Claude to turn the random keystrokes into a game felt almost incidental to everything else.

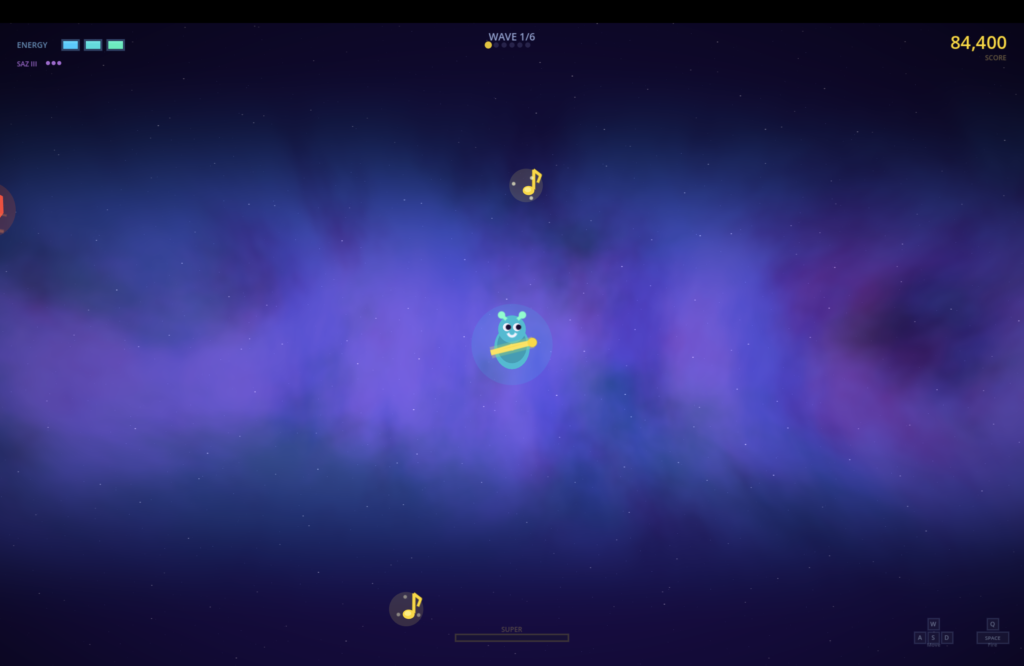

Above is a screenshot from the game, which I downloaded and played. I did not beat the final boss!